Will we trust autonomous cars? And how can they trust us?

Photo: Fotolia

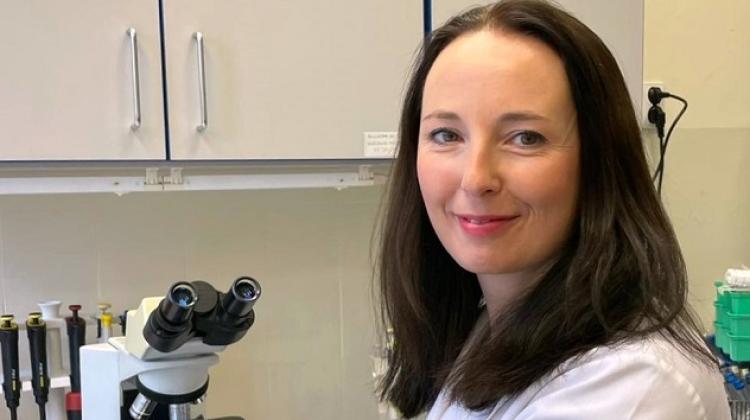

Trust is paramount in the design of autonomous cars. How much can we trust the machine? And should it always trust the driver? These questions are the subject of research by Prof. Marta Kwiatkowska of the University of Oxford.

Would you trust an autonomous car enough to send your children to school in it? Trust is an important problem that manufacturers of autonomous cars, which are controlled by algorithms, must face. The subject is being studied by Prof. Marta Kwiatkowska, a Polish researcher at the University of Oxford. She presented her research at the Science: Polish Perspectives conference in Cambridge.

Currently available solutions (such as Tesla's Autopilot) still require the driver to be present and in control. However, some drivers come close to panic when driving with the autopilot on. They clearly lack confidence in the machine. But there are also those who - against the manufacturer's instructions - doze off behind the wheel, for example in a traffic jam on the highway, leaving all control to the algorithms.

Prof. Kwiatkowska adopted the definition that trust is the willingness of one party - the trusting party - to be exposed to the effects of actions of the other party. Trust is based on the conviction that the other party will perform a specific action, important for the trusting party, even if the other party is not being observed or controlled.

She talked about the dilemmas that sooner or later will have to be addressed when writing algorithms for autonomous vehicles. When there is a large group of pedestrians on the road and the car has no chance of braking in time, should it sacrifice its passenger's life and, for example, swerve and hit a tree? And what if there are two options - hit one person or several? When such situations happen in real life, a driver must make a decision in a fraction of a second. There is no time to think. The author of the algorithm will have to take such eventualities into account.

In life there are people who are willing to sacrifice their lives to save others, but also those who would not take such a step. "Which car would you rather drive - one that sacrifices the passenger or one that guarantees the passenger's safety?" - asked Prof. Kwiatkowska. She added that it is also a problem for the manufacturer, who should protect passengers from danger.

Another problem associated with trust is that the car - faced with potentially infinite input data - may make mistakes. It cannot be ruled out that, for example, the machine will misinterpret the signals from its cameras. "Sometimes one pixel can change the image classification" - said Kwiatkowska. She explained that such a small error could mean that the car interprets a red light as green.

The researcher also drew attention to the question of the machine trusting the driver. "People can really be bold" - she commented. Therefore, we should consider in which situations the car should not trust the driver. She explained that drivers sometimes test the car and give it foolish commands. How much should a car trust its passenger?

Prof. Kwiatkowska said that the next step - in terms of people's confidence in machines - will require the combined efforts of engineers, social scientists and those who shape policy.

The goal of the Science: Polish Perspectives conference, which took place on 3-4 November, was to integrate the community of Polish scientists working abroad. Science in Poland was one of the media sponsors of the event.

Author: Ludwika Tomala

PAP - Science in Poland

lt/ ekr/ kap/

tr. RL
